DP-203 Practice Test Free – 50 Questions to Test Your Knowledge

Are you preparing for the DP-203 certification exam? If so, taking a free DP-203 practice test is one of the best ways to assess your knowledge and improve your chances of passing. In this post, we provide 50 free DP-203 practice questions designed to help you test your skills and identify areas for improvement.

By taking a free DP-203 practice test, you can:

- Familiarize yourself with the exam format and question types

- Identify your strengths and weaknesses

- Gain confidence before the actual exam

50 Free DP-203 Practice Questions

Below, you will find 50 free DP-203 practice questions to help you prepare for the exam. These questions are designed to reflect the real exam structure and difficulty level.

HOTSPOT - You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Sales.Orders. Sales.Orders contains a column named SalesRep. You plan to implement row-level security (RLS) for Sales.Orders. You need to create the security policy that will be used to implement RLS. The solution must ensure that sales representatives only see rows for which the value of the SalesRep column matches their username. How should you complete the code? To answer, select the appropriate options in the answer area.
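For intuition only, a row-level security filter predicate behaves like a per-row function that returns true when the row belongs to the caller. The Python sketch below simulates that idea in memory. It is not T-SQL and not the code from the answer area; `rls_filter`, `visible_rows`, and the sample rows are hypothetical names and data introduced for illustration.

```python
from typing import Dict, List

Row = Dict[str, str]


def rls_filter(row: Row, username: str) -> bool:
    """Predicate: a row is visible only if its SalesRep matches the caller."""
    return row["SalesRep"] == username


def visible_rows(rows: List[Row], username: str) -> List[Row]:
    """Apply the predicate to every row, as a filter policy would on a read."""
    return [row for row in rows if rls_filter(row, username)]


# Hypothetical sample data standing in for Sales.Orders.
orders = [
    {"OrderId": "1", "SalesRep": "alice"},
    {"OrderId": "2", "SalesRep": "bob"},
    {"OrderId": "3", "SalesRep": "alice"},
]
```

With this sample, `visible_rows(orders, "alice")` keeps orders 1 and 3, and a user with no matching rows sees an empty list rather than an error.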

You have a Log Analytics workspace named la1 and an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 sends logs to la1. You need to identify whether a recently executed query on Pool1 used the result set cache. What are two ways to achieve the goal? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. Review the sys.dm_pdw_sql_requests dynamic management view in Pool1.

B. Review the sys.dm_pdw_exec_requests dynamic management view in Pool1.

C. Use the Monitor hub in Synapse Studio.

D. Review the AzureDiagnostics table in la1.

E. Review the sys.dm_pdw_request_steps dynamic management view in Pool1.

You have an Azure subscription that contains an Azure Synapse Analytics workspace and a user named User1. You need to ensure that User1 can review the Azure Synapse Analytics database templates from the gallery. The solution must follow the principle of least privilege. Which role should you assign to User1?

A. Storage Blob Data Contributor

B. Synapse Administrator

C. Synapse Contributor

D. Synapse User

You manage an enterprise data warehouse in Azure Synapse Analytics. Users report slow performance when they run commonly used queries. Users do not report performance changes for infrequently used queries. You need to monitor resource utilization to determine the source of the performance issues. Which metric should you monitor?

A. DWU percentage

B. Cache hit percentage

C. DWU limit

D. Data Warehouse Units (DWU) used

HOTSPOT - You have an Azure data factory. You execute a pipeline that contains an activity named Activity1. Activity1 produces the following output. For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

A company purchases IoT devices to monitor manufacturing machinery. The company uses an Azure IoT Hub to communicate with the IoT devices. The company must be able to monitor the devices in real-time. You need to design the solution. What should you recommend?

A. Azure Analysis Services using Azure Portal

B. Azure Stream Analytics Edge application using Microsoft Visual Studio

C. Azure Analysis Services using Azure PowerShell

D. Azure Analysis Services using Microsoft Visual Studio

You have an Azure Synapse Analytics dedicated SQL pool named pool1. You need to perform a monthly audit of SQL statements that affect sensitive data. The solution must minimize administrative effort. What should you include in the solution?

A. workload management

B. sensitivity labels

C. dynamic data masking

D. Microsoft Defender for SQL

A company purchases IoT devices to monitor manufacturing machinery. The company uses an Azure IoT Hub to communicate with the IoT devices. The company must be able to monitor the devices in real-time. You need to design the solution. What should you recommend?

A. Azure Analysis Services using Microsoft Visual Studio

B. Azure Data Factory instance using Azure PowerShell

C. Azure Analysis Services using Azure PowerShell

D. Azure Stream Analytics cloud job using Azure Portal

You have several Azure Data Factory pipelines that contain a mix of the following types of activities: • Power Query • Notebook • Copy • Jar Which two Azure services should you use to debug the activities? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Azure Machine Learning

B. Azure Data Factory

C. Azure Synapse Analytics

D. Azure HDInsight

E. Azure Databricks

You have an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 contains a fact table named Table1. You need to identify the extent of the data skew in Table1. What should you do in Synapse Studio?

A. Connect to the built-in pool and query sys.dm_pdw_nodes_db_partition_stats.

B. Connect to Pool1 and run DBCC PDW_SHOWSPACEUSED.

C. Connect to Pool1 and query sys.dm_pdw_node_status.

D. Connect to the built-in pool and query sys.dm_pdw_sys_info.
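As background on what "extent of the data skew" means, one common way to quantify it is to compare the largest and smallest row counts across a table's distributions. The sketch below assumes you already have one row count per distribution (a dedicated SQL pool spreads a table over 60 distributions); `skew_percent` and the sample counts are hypothetical, and the formula is one reasonable measure rather than the only one.

```python
from typing import Sequence


def skew_percent(rows_per_distribution: Sequence[int]) -> float:
    """Return (max - min) / max as a percentage; 0.0 for an empty table."""
    largest = max(rows_per_distribution)
    smallest = min(rows_per_distribution)
    if largest == 0:
        return 0.0
    return (largest - smallest) / largest * 100.0


# Hypothetical row counts: an evenly spread table and one with a hot distribution.
even_table = [1000] * 60
skewed_table = [1000] * 59 + [4000]
```

An evenly distributed table scores 0, while the hypothetical table with one oversized distribution scores 75.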

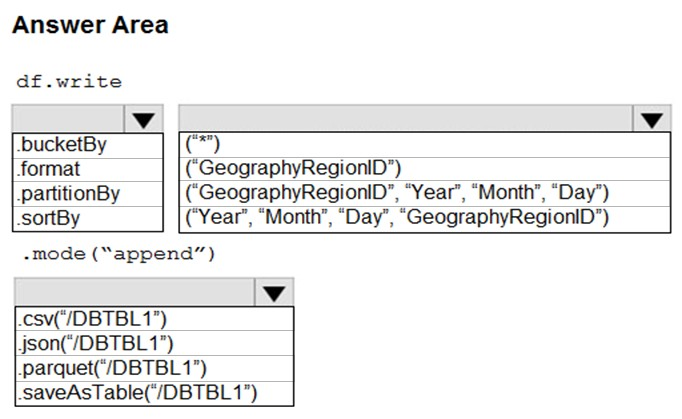

HOTSPOT - You develop a dataset named DBTBL1 by using Azure Databricks. DBTBL1 contains the following columns: ✑ SensorTypeID ✑ GeographyRegionID ✑ Year ✑ Month ✑ Day ✑ Hour ✑ Minute ✑ Temperature ✑ WindSpeed ✑ Other You need to store the data to support daily incremental load pipelines that vary for each GeographyRegionID. The solution must minimize storage costs. How should you complete the code? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You have an Azure data factory named DF1. DF1 contains a single pipeline that is executed by using a schedule trigger. From Diagnostics settings, you configure pipeline runs to be sent to a resource-specific destination table in a Log Analytics workspace. You need to run KQL queries against the table. Which table should you query?

A. ADFPipelineRun

B. ADFTriggerRun

C. ADFActivityRun

D. AzureDiagnostics

You have a data warehouse in Azure Synapse Analytics. You need to ensure that the data in the data warehouse is encrypted at rest. What should you enable?

A. Advanced Data Security for this database

B. Transparent Data Encryption (TDE)

C. Secure transfer required

D. Dynamic Data Masking

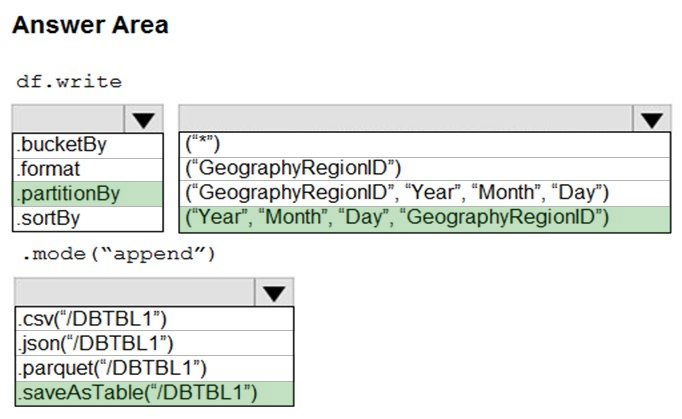

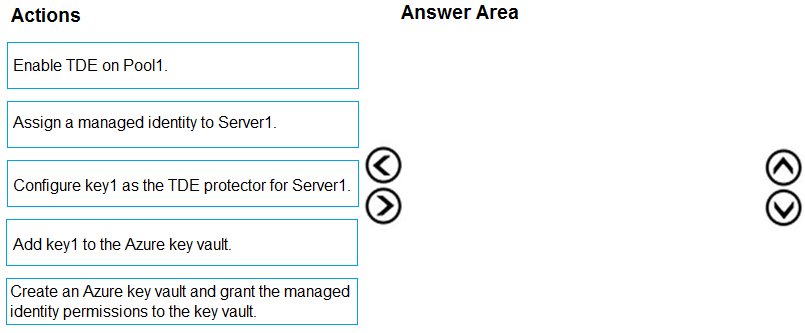

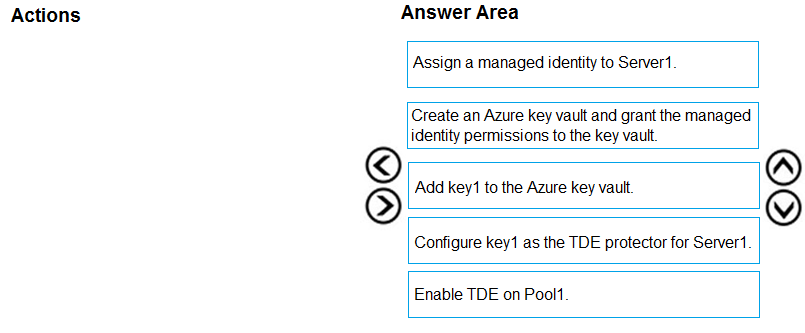

DRAG DROP - You have an Azure Synapse Analytics SQL pool named Pool1 on a logical Microsoft SQL server named Server1. You need to implement Transparent Data Encryption (TDE) on Pool1 by using a custom key named key1. Which five actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. Select and Place:

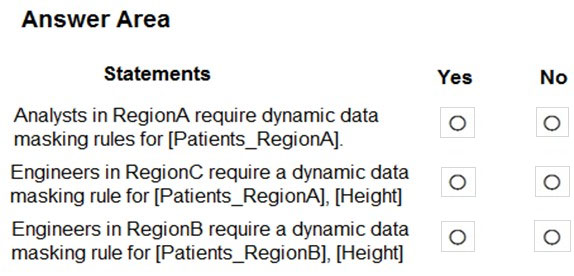

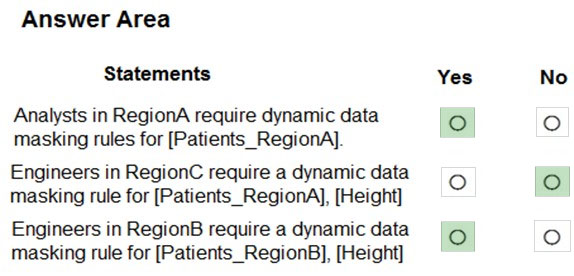

HOTSPOT - You are designing an Azure Synapse Analytics dedicated SQL pool. Groups will have access to sensitive data in the pool as shown in the following table. You have policies for the sensitive data. The policies vary by region as shown in the following table.

You have a table of patients for each region. The tables contain the following potentially sensitive columns.

You are designing dynamic data masking to maintain compliance. For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point. Hot Area:

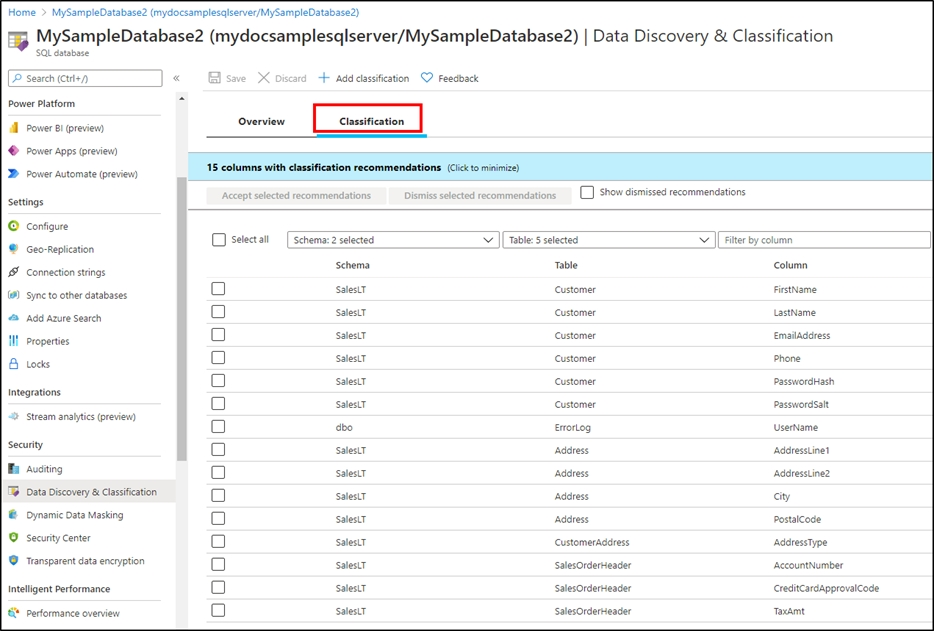

You are designing an Azure Synapse Analytics dedicated SQL pool. You need to ensure that you can audit access to Personally Identifiable Information (PII). What should you include in the solution?

A. column-level security

B. dynamic data masking

C. row-level security (RLS)

D. sensitivity classifications

You have an Azure Data Factory version 2 (V2) resource named Df1. Df1 contains a linked service. You have an Azure Key vault named vault1 that contains an encryption key named key1. You need to encrypt Df1 by using key1. What should you do first?

A. Add a private endpoint connection to vault1.

B. Enable Azure role-based access control on vault1.

C. Remove the linked service from Df1.

D. Create a self-hosted integration runtime.

You develop data engineering solutions for a company. A project requires the deployment of data to Azure Data Lake Storage. You need to implement role-based access control (RBAC) so that project members can manage the Azure Data Lake Storage resources. Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Create security groups in Azure Active Directory (Azure AD) and add project members.

B. Configure end-user authentication for the Azure Data Lake Storage account.

C. Assign Azure AD security groups to Azure Data Lake Storage.

D. Configure Service-to-service authentication for the Azure Data Lake Storage account.

E. Configure access control lists (ACL) for the Azure Data Lake Storage account.

You are designing an enterprise data warehouse in Azure Synapse Analytics that will contain a table named Customers. Customers will contain credit card information. You need to recommend a solution to provide salespeople with the ability to view all the entries in Customers. The solution must prevent all the salespeople from viewing or inferring the credit card information. What should you include in the recommendation?

A. data masking

B. Always Encrypted

C. column-level security

D. row-level security

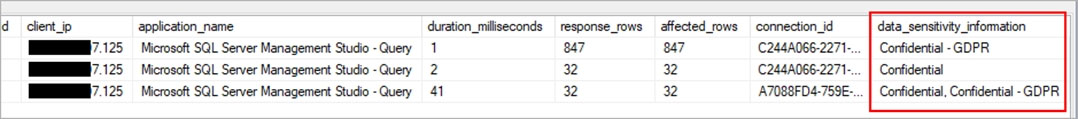

You plan to create an Azure Synapse Analytics dedicated SQL pool. You need to minimize the time it takes to identify queries that return confidential information as defined by the company's data privacy regulations and the users who executed the queries. Which two components should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. sensitivity-classification labels applied to columns that contain confidential information

B. resource tags for databases that contain confidential information

C. audit logs sent to a Log Analytics workspace

D. dynamic data masking for columns that contain confidential information

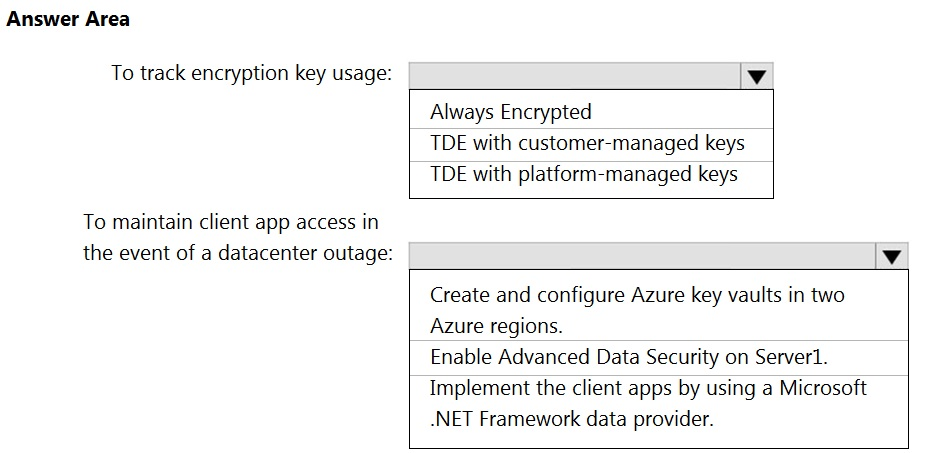

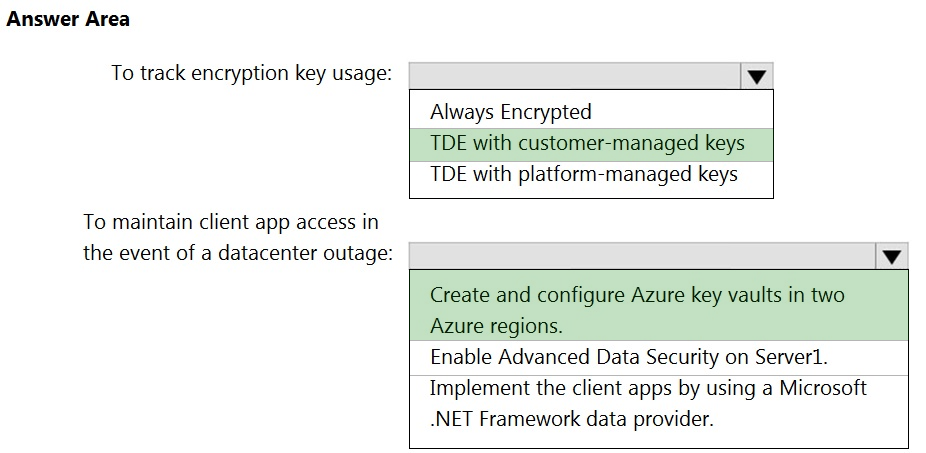

HOTSPOT - You have an Azure subscription that contains a logical Microsoft SQL server named Server1. Server1 hosts an Azure Synapse Analytics SQL dedicated pool named Pool1. You need to recommend a Transparent Data Encryption (TDE) solution for Server1. The solution must meet the following requirements: ✑ Track the usage of encryption keys. ✑ Maintain the access of client apps to Pool1 in the event of an Azure datacenter outage that affects the availability of the encryption keys. What should you include in the recommendation? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

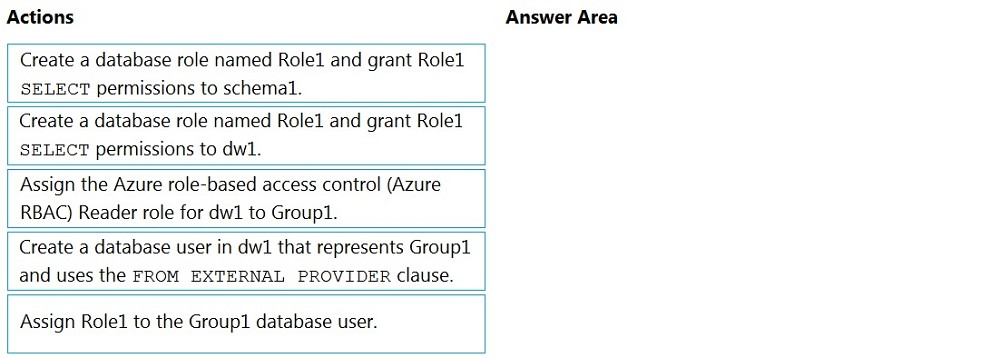

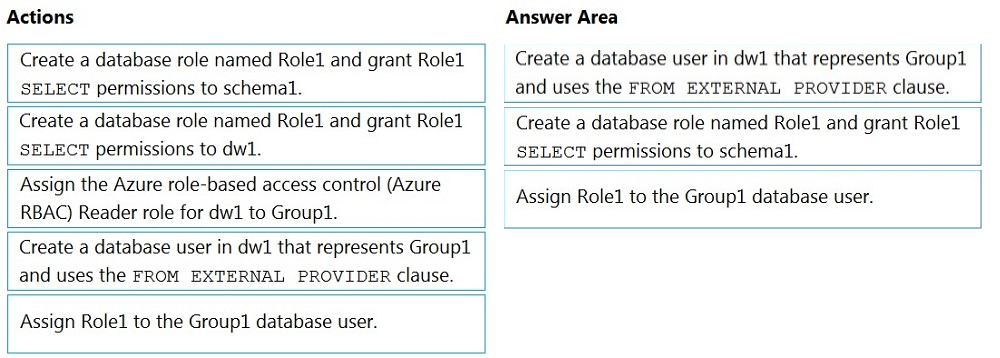

DRAG DROP - You have an Azure Active Directory (Azure AD) tenant that contains a security group named Group1. You have an Azure Synapse Analytics dedicated SQL pool named dw1 that contains a schema named schema1. You need to grant Group1 read-only permissions to all the tables and views in schema1. The solution must use the principle of least privilege. Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select. Select and Place:

You have an Azure Stream Analytics job named Job1. The metrics of Job1 from the last hour are shown in the following table. The late arrival tolerance for Job1 is set to five seconds. You need to optimize Job1. Which two actions achieve the goal? Each correct answer presents a complete solution. NOTE: Each correct answer is worth one point.

A. Increase the number of SUs.

B. Parallelize the query.

C. Resolve errors in output processing.

D. Resolve errors in input processing.

You have an Azure subscription that contains an Azure Synapse Analytics dedicated SQL pool named Pool1. You need to monitor Pool1. The solution must ensure that you capture the start and end times of each query completed in Pool1. Which diagnostic setting should you use?

A. Sql Requests

B. Request Steps

C. Dms Workers

D. Exec Requests

You have an Azure Data Lake Storage Gen2 account named adls2 that is protected by a virtual network. You are designing a SQL pool in Azure Synapse that will use adls2 as a source. What should you use to authenticate to adls2?

A. an Azure Active Directory (Azure AD) user

B. a shared key

C. a shared access signature (SAS)

D. a managed identity

You have an Azure data factory named DF1. DF1 contains a pipeline that has five activities. You need to monitor queue times across the activities by using Log Analytics. What should you do in DF1?

A. Connect DF1 to a Microsoft Purview account.

B. Add a diagnostic setting that sends activity runs to a Log Analytics workspace.

C. Enable auto refresh for the Activity Logs Insights workbook.

D. Add a diagnostic setting that sends pipeline runs to a Log Analytics workspace.

You have a SQL pool in Azure Synapse that contains a table named dbo.Customers. The table contains a column named Email. You need to prevent nonadministrative users from seeing the full email addresses in the Email column. The users must see values in a format of [email protected] instead. What should you do?

A. From Microsoft SQL Server Management Studio, set an email mask on the Email column.

B. From the Azure portal, set a mask on the Email column.

C. From Microsoft SQL Server Management Studio, grant the SELECT permission to the users for all the columns in the dbo.Customers table except Email.

D. From the Azure portal, set a sensitivity classification of Confidential for the Email column.
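To make the idea of an email mask concrete, the sketch below mimics the shape that the built-in SQL email masking function is documented to produce: the first character of the address followed by the constant `XXX@XXXX.com`. This is a plain Python imitation for illustration, not how the database applies the mask, and `mask_email` is a hypothetical helper name.

```python
def mask_email(address: str) -> str:
    """Keep the first character and replace the rest with a constant suffix."""
    if not address:
        # Nothing to expose for an empty value.
        return address
    return address[0] + "XXX@XXXX.com"
```

Note that the masked value always ends in `.com`, whatever the real domain was, so the original domain cannot be inferred from the masked output.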

You have an Azure subscription that contains an Azure Synapse Analytics workspace named workspace1. workspace1 contains an Azure Synapse Analytics dedicated SQL pool named Pool1. You create a mapping data flow in an Azure Synapse pipeline that writes data to Pool1. You execute the data flow and capture the execution information. You need to identify how long it takes to write the data to Pool1. Which metric should you use?

A. the rows written

B. the sink processing time

C. the transformation processing time

D. the post processing time

You are designing a security model for an Azure Synapse Analytics dedicated SQL pool that will support multiple companies. You need to ensure that users from each company can view only the data of their respective company. Which two objects should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. a security policy

B. a custom role-based access control (RBAC) role

C. a predicate function

D. a column encryption key

E. asymmetric keys

HOTSPOT - You have an Azure Synapse Analytics dedicated SQL pool named sqlpool1 that contains a table named Sales1. Each row in the Sales table contains regional sales data and a field that lists the username of a sales analyst. You need to configure row-level security (RLS) to ensure that the analysts can view only the rows containing their respective data. What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You have an Azure subscription linked to an Azure Active Directory (Azure AD) tenant that contains a service principal named ServicePrincipal1. The subscription contains an Azure Data Lake Storage account named adls1. Adls1 contains a folder named Folder2 that has a URI of https://adls1.dfs.core.windows.net/container1/Folder1/Folder2/. ServicePrincipal1 has the access control list (ACL) permissions shown in the following table. You need to ensure that ServicePrincipal1 can perform the following actions: ✑ Traverse child items that are created in Folder2. ✑ Read files that are created in Folder2. The solution must use the principle of least privilege. Which two permissions should you grant to ServicePrincipal1 for Folder2? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Access - Read

B. Access - Write

C. Access - Execute

D. Default - Read

E. Default - Write

F. Default - Execute
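The options distinguish access ACLs from default ACLs. As a rough mental model, an access ACL governs the item it is set on, while a default ACL on a directory acts as a template copied to items created beneath it. The Python sketch below models only that copying behavior; it deliberately ignores masks, umask, owners, and the traversal checks on parent folders. The `Directory` class, the principal `app1`, and the permission values are hypothetical and are not meant to indicate the answer.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

Perms = Set[str]  # any subset of {"r", "w", "x"}


def _copy(acl: Dict[str, Perms]) -> Dict[str, Perms]:
    """Deep-copy an ACL so children do not share sets with the parent."""
    return {principal: set(perms) for principal, perms in acl.items()}


@dataclass
class Directory:
    access: Dict[str, Perms] = field(default_factory=dict)   # applies to this directory
    default: Dict[str, Perms] = field(default_factory=dict)  # template for new children

    def create_child_dir(self) -> "Directory":
        # A new subdirectory receives the parent's default ACL as both its
        # access ACL and its own default ACL.
        return Directory(access=_copy(self.default), default=_copy(self.default))

    def create_child_file(self) -> Dict[str, Perms]:
        # A new file has only an access ACL, seeded from the parent's default ACL.
        return _copy(self.default)


# Hypothetical folder: app1 may traverse it, and new children grant app1 read/write.
parent = Directory(access={"app1": {"x"}}, default={"app1": {"r", "w"}})
child_dir = parent.create_child_dir()
child_file_acl = parent.create_child_file()
```

In this model, changing the parent's access ACL never affects items created later; only the default ACL does.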

You are designing a database for an Azure Synapse Analytics dedicated SQL pool to support workloads for detecting ecommerce transaction fraud. Data will be combined from multiple ecommerce sites and can include sensitive financial information such as credit card numbers. You need to recommend a solution that meets the following requirements: ✑ Users must be able to identify potentially fraudulent transactions. ✑ Users must be able to use credit cards as a potential feature in models. ✑ Users must NOT be able to access the actual credit card numbers. What should you include in the recommendation?

A. Transparent Data Encryption (TDE)

B. row-level security (RLS)

C. column-level encryption

D. Azure Active Directory (Azure AD) pass-through authentication

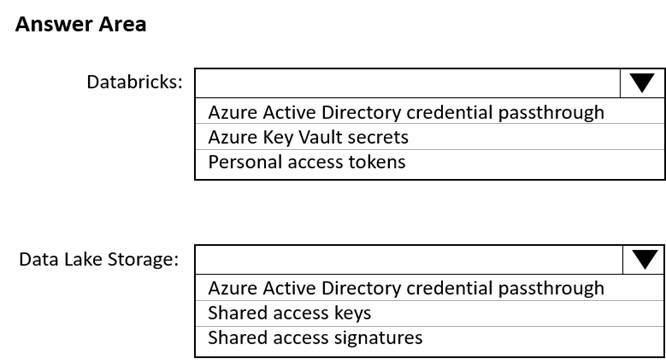

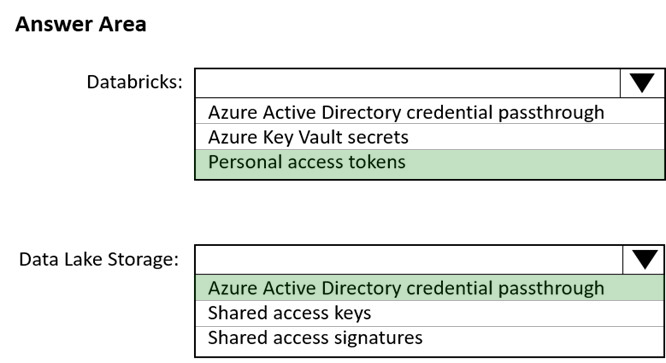

HOTSPOT - You use Azure Data Lake Storage Gen2 to store data that data scientists and data engineers will query by using Azure Databricks interactive notebooks. Users will have access only to the Data Lake Storage folders that relate to the projects on which they work. You need to recommend which authentication methods to use for Databricks and Data Lake Storage to provide the users with the appropriate access. The solution must minimize administrative effort and development effort. Which authentication method should you recommend for each Azure service? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You are developing an application that uses Azure Data Lake Storage Gen2. You need to recommend a solution to grant permissions to a specific application for a limited time period. What should you include in the recommendation?

A. role assignments

B. shared access signatures (SAS)

C. Azure Active Directory (Azure AD) identities

D. account keys

You are designing an Azure Synapse solution that will provide a query interface for the data stored in an Azure Storage account. The storage account is only accessible from a virtual network. You need to recommend an authentication mechanism to ensure that the solution can access the source data. What should you recommend?

A. a managed identity

B. anonymous public read access

C. a shared key

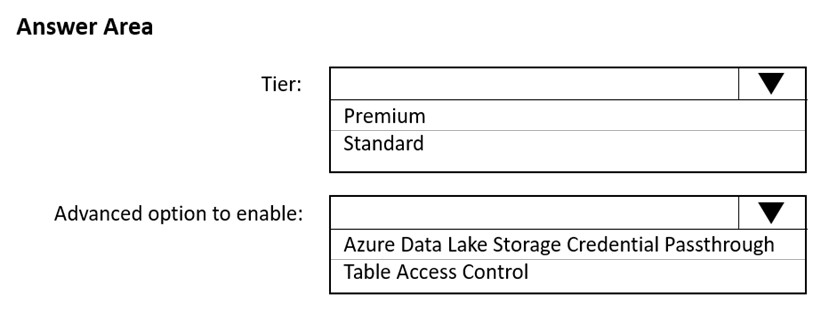

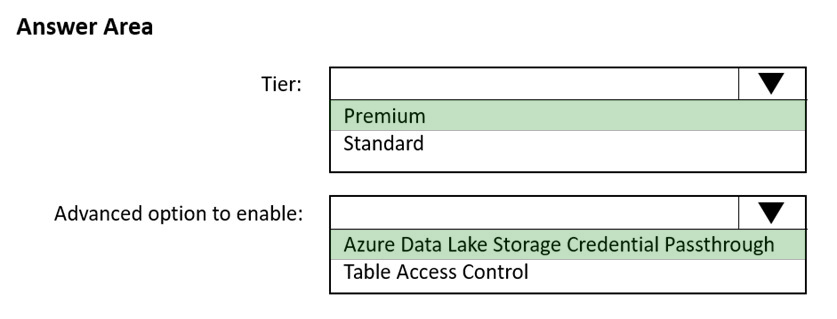

HOTSPOT - You need to implement an Azure Databricks cluster that automatically connects to Azure Data Lake Storage Gen2 by using Azure Active Directory (Azure AD) integration. How should you configure the new cluster? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

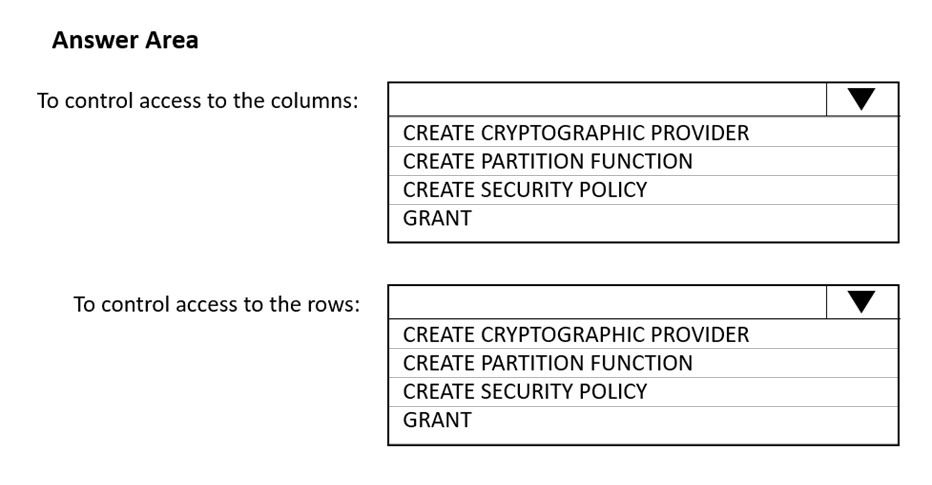

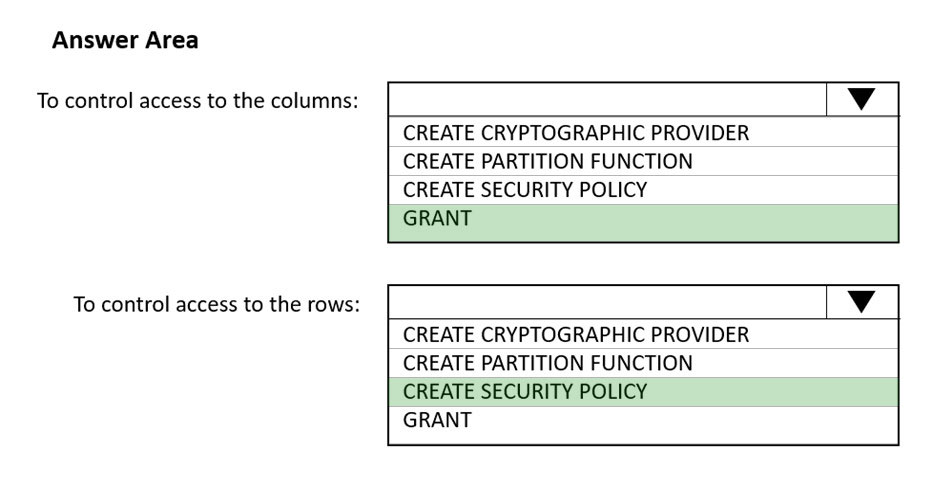

HOTSPOT - You have an Azure Synapse Analytics SQL pool named Pool1. In Azure Active Directory (Azure AD), you have a security group named Group1. You need to control the access of Group1 to specific columns and rows in a table in Pool1. Which Transact-SQL commands should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You need to schedule an Azure Data Factory pipeline to execute when a new file arrives in an Azure Data Lake Storage Gen2 container. Which type of trigger should you use?

A. on-demand

B. tumbling window

C. schedule

D. event

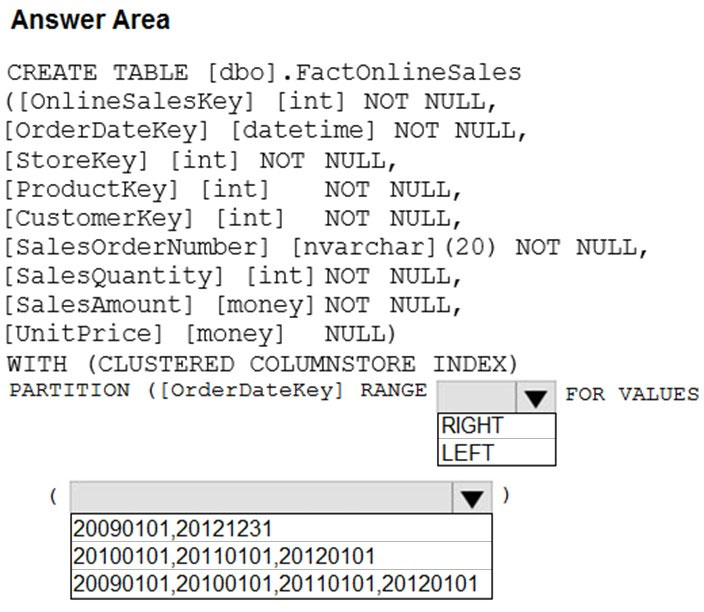

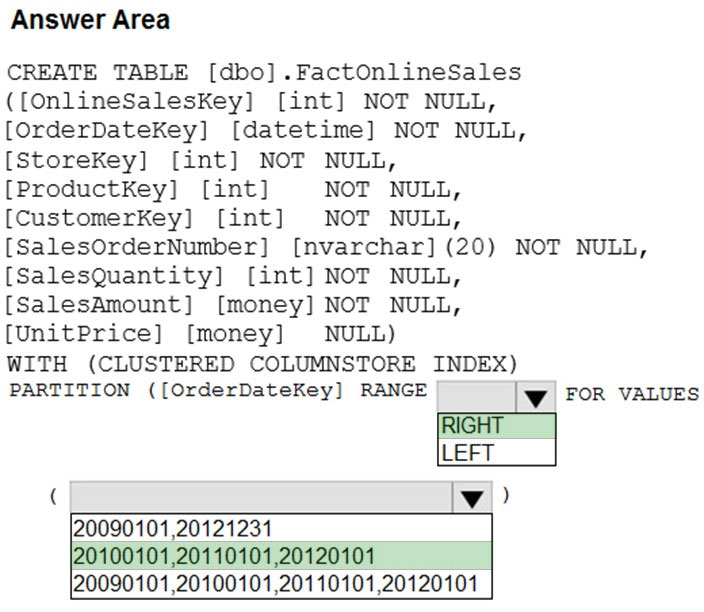

HOTSPOT - You have an enterprise data warehouse in Azure Synapse Analytics that contains a table named FactOnlineSales. The table contains data from the start of 2009 to the end of 2012. You need to improve the performance of queries against FactOnlineSales by using table partitions. The solution must meet the following requirements: ✑ Create four partitions based on the order date. ✑ Ensure that each partition contains all the orders placed during a given calendar year. How should you complete the T-SQL command? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:
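To see how boundary values carve a date range into partitions, the sketch below assigns order dates to partitions under "range right" semantics, where each boundary value belongs to the partition on its right. Three boundaries produce four partitions. The boundary list and the `partition_number` helper are hypothetical illustrations of the mechanism in Python, not the T-SQL from the answer area.

```python
from bisect import bisect_right
from datetime import date

# Hypothetical boundaries: the first day of each year after the first.
BOUNDARIES = [date(2010, 1, 1), date(2011, 1, 1), date(2012, 1, 1)]


def partition_number(order_date: date) -> int:
    """Return the 1-based partition for a date under range-right semantics.

    bisect_right counts the boundaries that are <= order_date, so a date equal
    to a boundary lands in the partition to the right of that boundary.
    """
    return bisect_right(BOUNDARIES, order_date) + 1
```

With these boundaries, every date in 2009 maps to partition 1 and every date in 2012 maps to partition 4, so each partition holds exactly one calendar year.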

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 that contains a table named Sales. Sales has row-level security (RLS) applied. RLS uses the following predicate filter. A user named SalesUser1 is assigned the db_datareader role for Pool1. Which rows in the Sales table are returned when SalesUser1 queries the table?

A. only the rows for which the value in the User_Name column is SalesUser1

B. all the rows

C. only the rows for which the value in the SalesRep column is Manager

D. only the rows for which the value in the SalesRep column is SalesUser1

You are designing a statistical analysis solution that will use custom proprietary Python functions on near real-time data from Azure Event Hubs. You need to recommend which Azure service to use to perform the statistical analysis. The solution must minimize latency. What should you recommend?

A. Azure Synapse Analytics

B. Azure Databricks

C. Azure Stream Analytics

D. Azure SQL Database

DRAG DROP - You have an Azure Data Lake Storage Gen 2 account named storage1. You need to recommend a solution for accessing the content in storage1. The solution must meet the following requirements: • List and read permissions must be granted at the storage account level. • Additional permissions can be applied to individual objects in storage1. • Security principals from Microsoft Azure Active Directory (Azure AD), part of Microsoft Entra, must be used for authentication. What should you use? To answer, drag the appropriate components to the correct requirements. Each component may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

You have an Azure Synapse Analytics dedicated SQL pool named SQL1 and a user named User1. You need to ensure that User1 can view requests associated with SQL1 by querying the sys.dm_pdw_exec_requests dynamic management view. The solution must follow the principle of least privilege. Which permission should you grant to User1?

A. VIEW DATABASE STATE

B. SHOWPLAN

C. CONTROL SERVER

D. VIEW ANY DATABASE

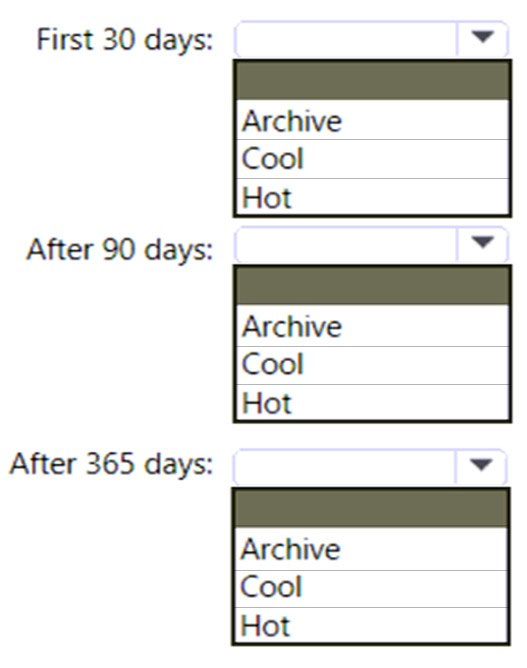

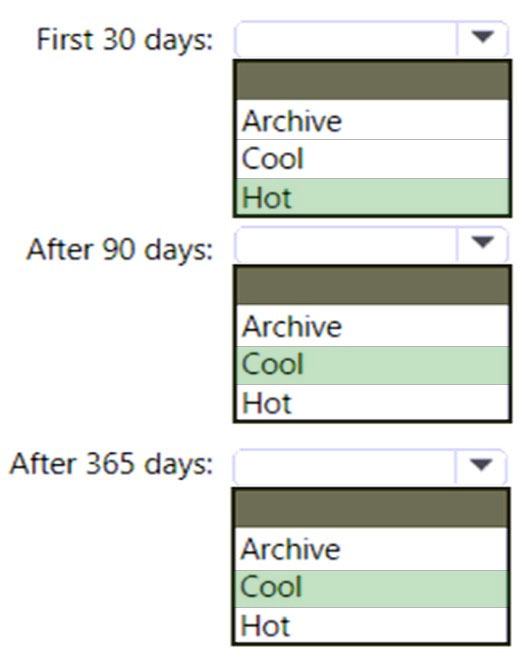

HOTSPOT - You are designing an application that will use an Azure Data Lake Storage Gen 2 account to store petabytes of license plate photos from toll booths. The account will use zone-redundant storage (ZRS). You identify the following usage patterns: * The data will be accessed several times a day during the first 30 days after the data is created. The data must meet an availability SLA of 99.9%. * After 90 days, the data will be accessed infrequently but must be available within 30 seconds. * After 365 days, the data will be accessed infrequently but must be available within five minutes. You need to recommend a data retention solution. The solution must minimize costs. Which access tier should you recommend for each time frame? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You have a tenant in Microsoft Azure Active Directory (Azure AD), part of Microsoft Entra. The tenant contains a group named Group1. You have an Azure subscription that contains the resources shown in the following table. You need to ensure that members of Group1 can read CSV files from storage1 by using the OPENROWSET function. The solution must meet the following requirements: • The members of Group1 must use credential1 to access storage1. • The principle of least privilege must be followed. Which permission should you grant to Group1?

A. EXECUTE

B. CONTROL

C. REFERENCES

D. SELECT

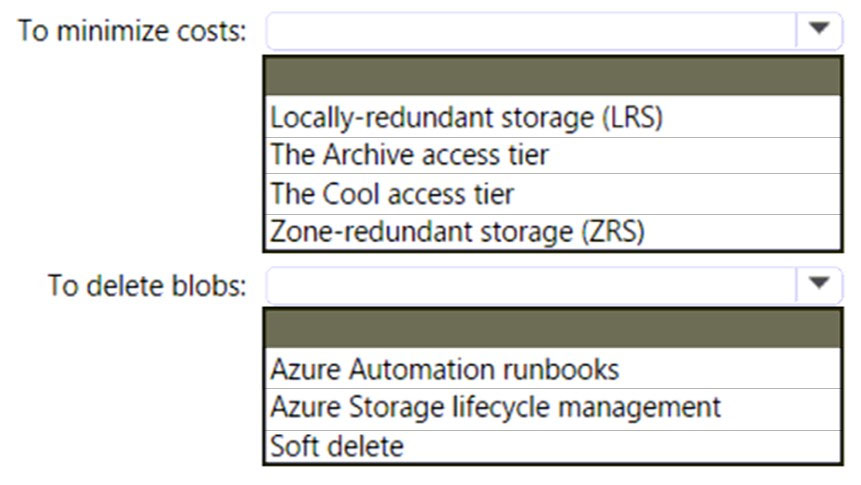

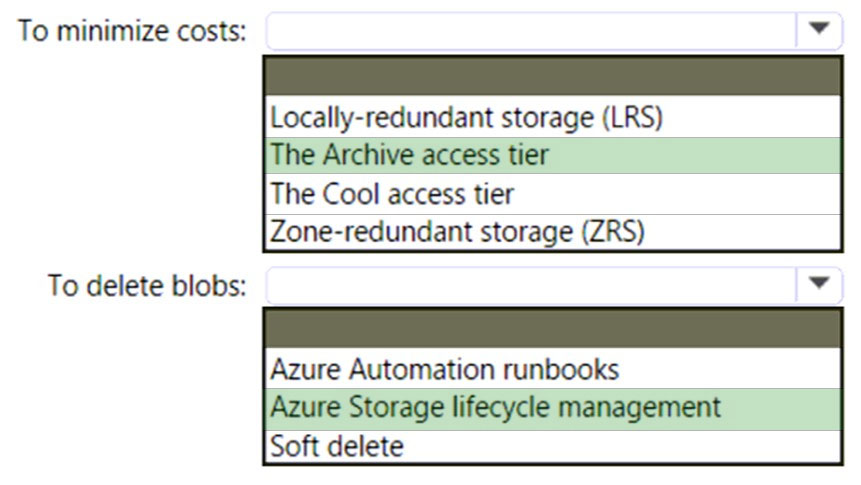

HOTSPOT - You have an Azure subscription. You need to deploy an Azure Data Lake Storage Gen2 Premium account. The solution must meet the following requirements: * Blobs that are older than 365 days must be deleted. * Administrative effort must be minimized. * Costs must be minimized. What should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

HOTSPOT - You have an Azure subscription that contains an Azure Data Lake Storage account. The storage account contains a data lake named DataLake1. You plan to use an Azure data factory to ingest data from a folder in DataLake1, transform the data, and land the data in another folder. You need to ensure that the data factory can read and write data from any folder in the DataLake1 container. The solution must meet the following requirements: • Minimize the risk of unauthorized user access. • Use the principle of least privilege. • Minimize maintenance effort. How should you configure access to the storage account for the data factory? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

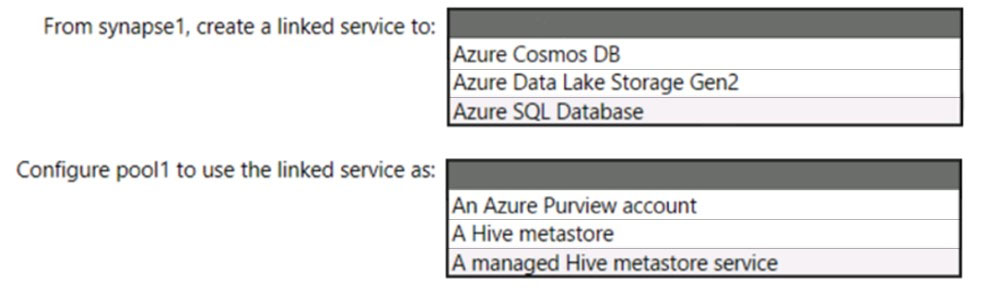

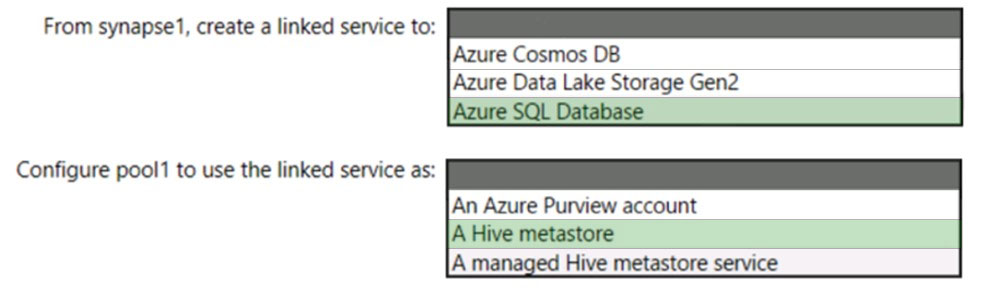

HOTSPOT - You have an Azure subscription that contains an Azure Databricks workspace named databricks1 and an Azure Synapse Analytics workspace named synapse1. The synapse1 workspace contains an Apache Spark pool named pool1. You need to share an Apache Hive catalog of pool1 with databricks1. What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You have an Azure Synapse Analytics dedicated SQL pool. You need to ensure that data in the pool is encrypted at rest. The solution must NOT require modifying applications that query the data. What should you do?

A. Enable encryption at rest for the Azure Data Lake Storage Gen2 account.

B. Enable Transparent Data Encryption (TDE) for the pool.

C. Use a customer-managed key to enable double encryption for the Azure Synapse workspace.

D. Create an Azure key vault in the Azure subscription and grant access to the pool.

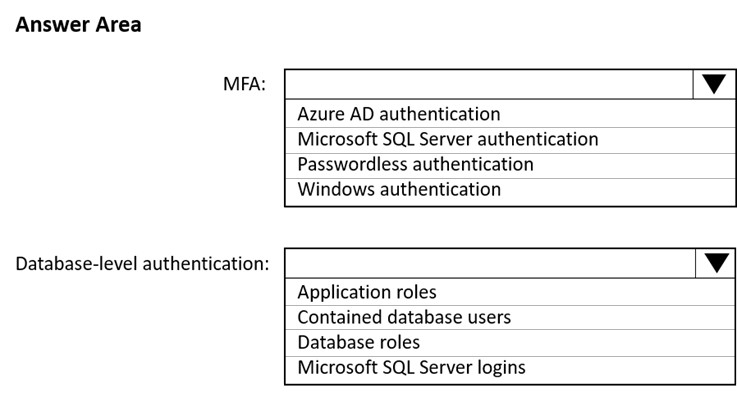

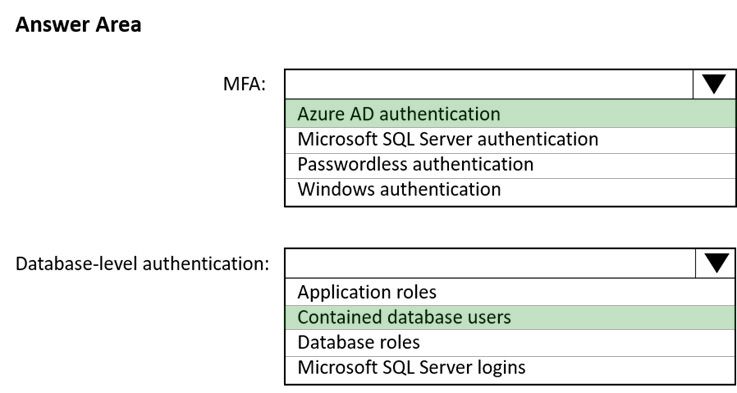

HOTSPOT - You have an Azure subscription that is linked to a hybrid Azure Active Directory (Azure AD) tenant. The subscription contains an Azure Synapse Analytics SQL pool named Pool1. You need to recommend an authentication solution for Pool1. The solution must support multi-factor authentication (MFA) and database-level authentication. Which authentication solution or solutions should you include in the recommendation? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

Get More DP-203 Practice Questions

If you’re looking for more free DP-203 practice questions, click here to access the full DP-203 practice test.

We regularly update this page with new practice questions, so be sure to check back frequently.

Good luck with your DP-203 certification journey!